
3 PROPOSED APPROACH

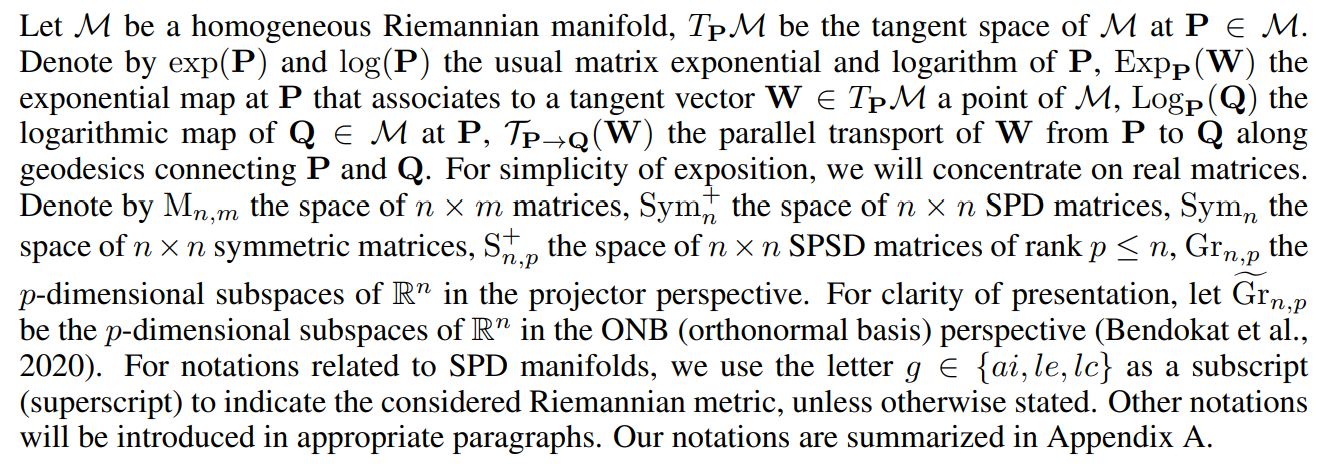

3.1 NOTATION

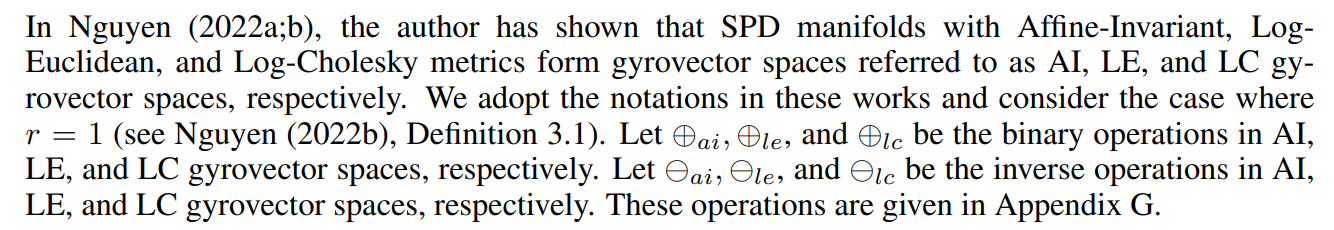

3.2 NEURAL NETWORKS ON SPD MANIFOLDS

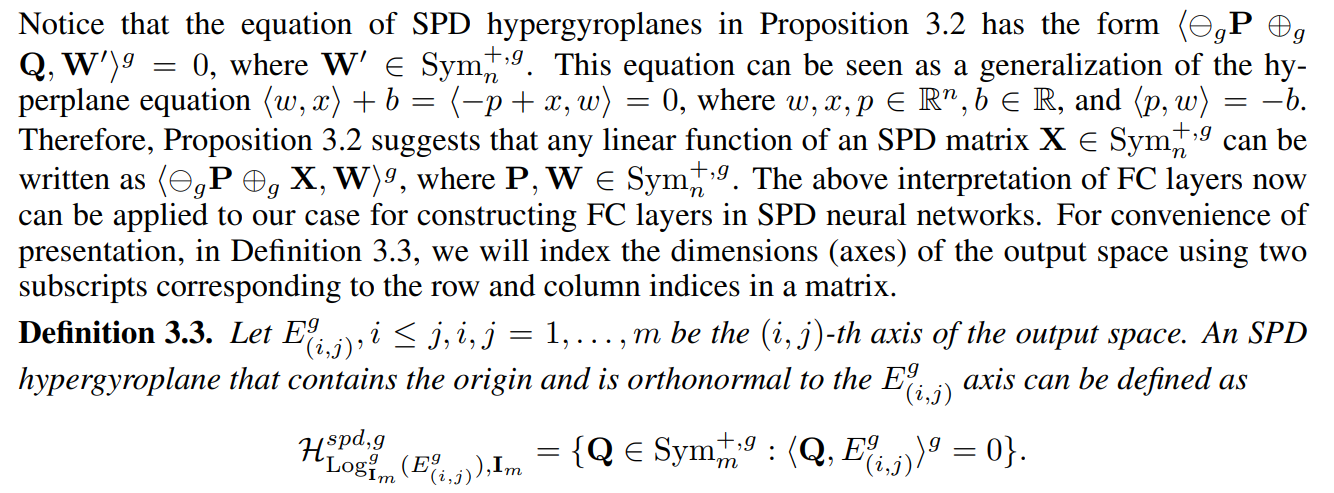

3.2.1 FC LAYERS IN SPD NEURAL NETWORKS

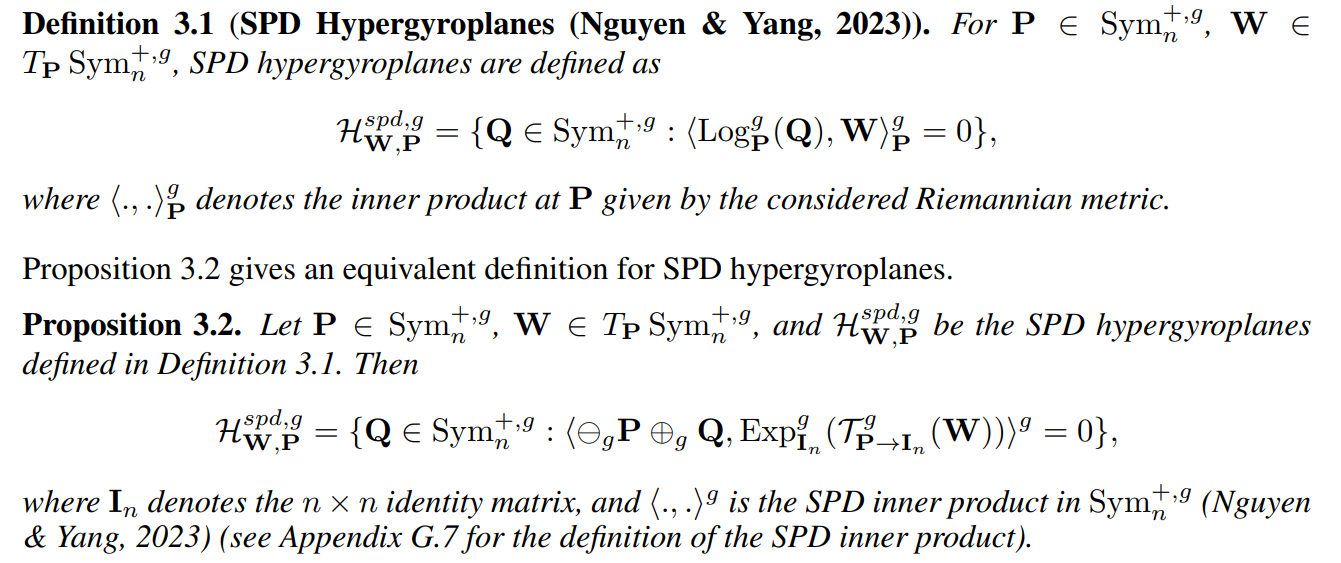

Our method for generalizing FC layers to the SPD manifold setting relies on a reformulation of SPD hypergyroplanes (Nguyen & Yang, 2023). We first recap the definition of SPD hypergyroplanes.

Proof. See Appendix I.
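Since the formal definition is not reproduced in this excerpt, the following NumPy sketch illustrates an SPD hypergyroplane in the simplest setting. It assumes the Log-Euclidean (LE) gyro-operations P ⊕ Q = exp(log P + log Q) and ⊖P = exp(−log P), and assumes a hypergyroplane through P with symmetric normal W is the set of Q with ⟨log(⊖P ⊕ Q), W⟩_F = 0. Under the LE metric the matrix logarithm is an isometry onto symmetric matrices with the Frobenius norm, so the distance from X to this set reduces to an ordinary point-to-hyperplane distance in the log domain. The helper names (`spd_log`, `le_gyroadd`, `le_signed_distance`, ...) are hypothetical and this is not the paper's implementation.

```python
import numpy as np

def spd_log(P: np.ndarray) -> np.ndarray:
    """Matrix logarithm of an SPD matrix via its eigendecomposition."""
    w, V = np.linalg.eigh(P)
    return (V * np.log(w)) @ V.T  # V diag(log w) V^T

def spd_exp(S: np.ndarray) -> np.ndarray:
    """Matrix exponential of a symmetric matrix via its eigendecomposition."""
    w, V = np.linalg.eigh(S)
    return (V * np.exp(w)) @ V.T  # V diag(exp w) V^T

# Assumed LE gyro-operations (an illustration, not taken from the paper):
#   P (+) Q = exp(log P + log Q),   (-)P = exp(-log P)
def le_gyroadd(P: np.ndarray, Q: np.ndarray) -> np.ndarray:
    return spd_exp(spd_log(P) + spd_log(Q))

def le_gyroinv(P: np.ndarray) -> np.ndarray:
    return spd_exp(-spd_log(P))

def le_signed_distance(X: np.ndarray, P: np.ndarray, W: np.ndarray) -> float:
    """Signed distance from SPD matrix X to the assumed LE hypergyroplane
    {Q : <log((-)P (+) Q), W>_F = 0}, with W a nonzero symmetric normal."""
    V = spd_log(le_gyroadd(le_gyroinv(P), X))  # equals log X - log P
    return float(np.sum(V * W) / np.linalg.norm(W, "fro"))

# Move 0.5 units from the identity along the unit normal W / ||W||_F.
W = np.array([[1.0, 0.0], [0.0, -1.0]])
X = spd_exp(0.5 * W / np.linalg.norm(W, "fro"))
print(round(le_signed_distance(X, np.eye(2), W), 6))  # 0.5
```

The LE case is chosen only because every operation has a closed form; the Affine-Invariant and Log-Cholesky cases treated in this section involve different gyro-operations.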

In DNNs, an FC layer linearly transforms the input in such a way that the k-th dimension of the output corresponds to the signed distance from the output to the hyperplane that contains the origin and is orthogonal to the k-th axis of the output space. This interpretation has proven useful in generalizing FC layers to the hyperbolic setting (Shimizu et al., 2021).
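To make this interpretation concrete, the following NumPy sketch (an illustration, not code from the paper) checks it for an ordinary Euclidean FC layer y = Wx + b. Following the reading in Shimizu et al. (2021), each y_k is taken as ||w_k|| times the signed distance from the input x to the hyperplane {z : ⟨w_k, z⟩ + b_k = 0}, and the output is the point whose signed distance to the axis hyperplane {z : z_k = 0} equals that value. The function names are hypothetical.

```python
import numpy as np

def signed_dist_to_hyperplane(x, a, c):
    """Signed distance from x to the hyperplane {z : <a, z> + c = 0}."""
    return (np.dot(a, x) + c) / np.linalg.norm(a)

def signed_dist_to_axis_hyperplane(y, k):
    """Signed distance from y to {z : z_k = 0}, the hyperplane through the
    origin orthogonal to the k-th axis (its unit normal is e_k)."""
    e_k = np.zeros_like(y)
    e_k[k] = 1.0
    return float(np.dot(y, e_k))

def fc_via_distances(W, b, x):
    """Rebuild y = W x + b from distances to hyperplanes (illustration only)."""
    # v_k = ||w_k|| * signed distance from x to the k-th hyperplane
    v = np.array([np.linalg.norm(W[k]) * signed_dist_to_hyperplane(x, W[k], b[k])
                  for k in range(W.shape[0])])
    # In Euclidean space, the point whose signed distance to {z : z_k = 0}
    # equals v_k for every k is v itself, so the output is y = v.
    return v

rng = np.random.default_rng(0)
W = rng.normal(size=(3, 4))
b = rng.normal(size=3)
x = rng.normal(size=4)
y = fc_via_distances(W, b, x)
print(np.allclose(y, W @ x + b))  # True
```

In the SPD setting, the Euclidean hyperplanes in this construction are replaced by SPD hypergyroplanes, and the last step (finding the output from its distances) is no longer trivial, which is why an orthonormal basis is needed for each metric.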

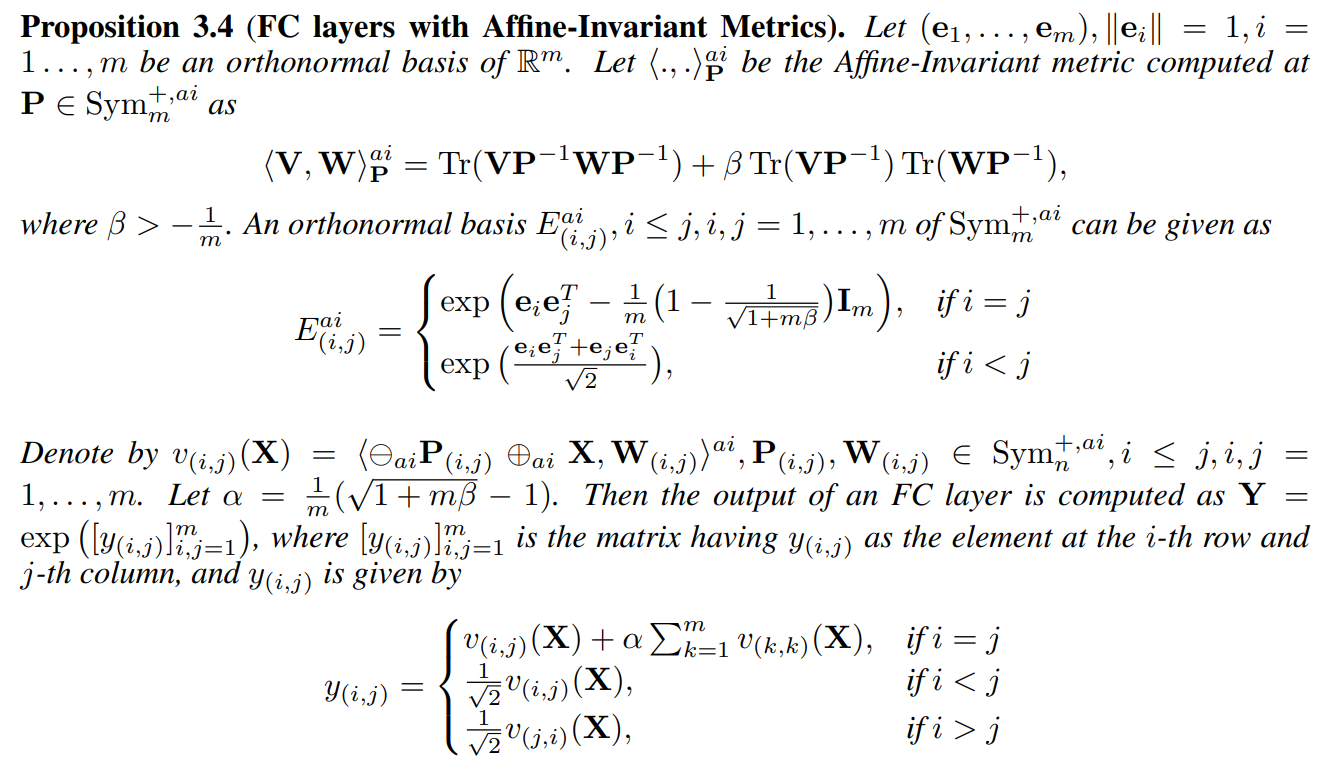

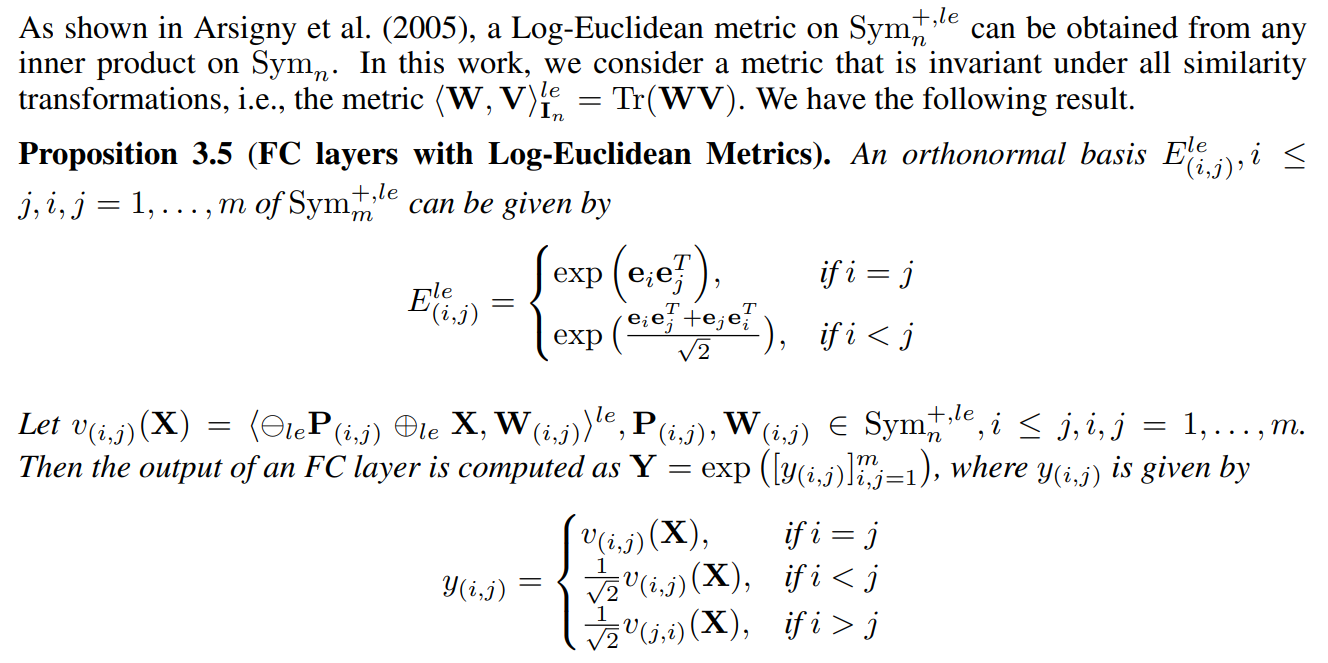

It remains to specify an orthonormal basis for each family of the considered Riemannian metrics of SPD manifolds. Proposition 3.4 gives such an orthonormal basis for AI gyrovector spaces along with the expression for the output of FC layers with Affine-Invariant metrics.

Proof. See Appendix J.

Proof. See Appendix K.

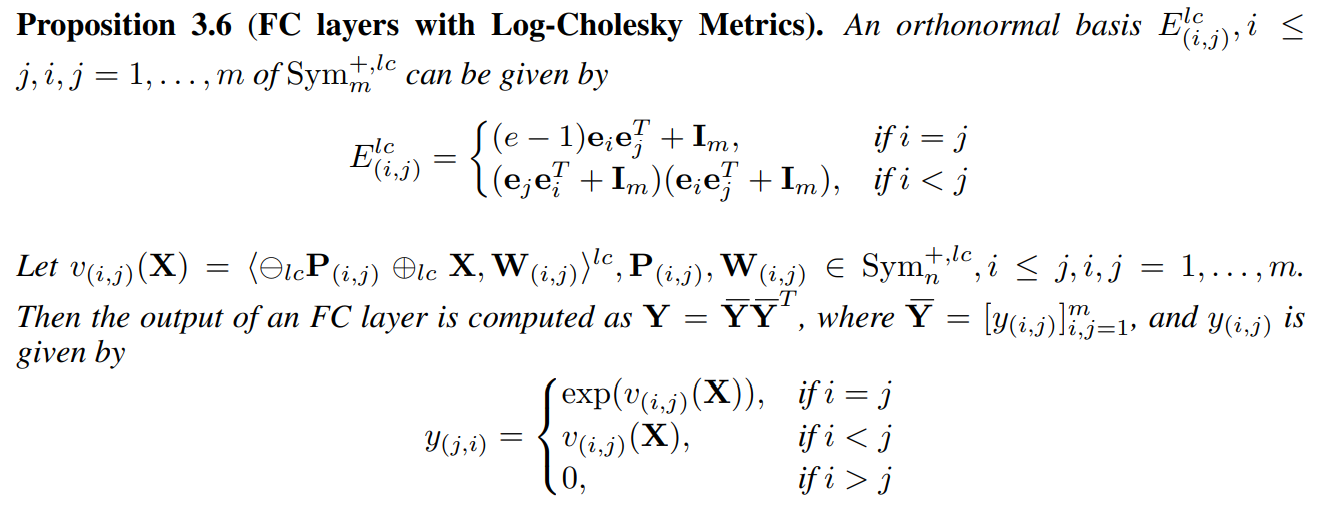
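Proposition 3.4 itself is not reproduced in this excerpt. As a minimal, hedged illustration of what an orthonormal basis means here: under both the Affine-Invariant and Log-Euclidean metrics, the inner product on the tangent space at the identity is ⟨U, V⟩ = tr(UV) on symmetric matrices, and one standard orthonormal basis of Sym(n) consists of the diagonal units E_ii together with the symmetrized pairs (E_ij + E_ji)/√2 for i < j. The helpers below are hypothetical and are not claimed to be the basis given in Proposition 3.4; they show that such a basis has n(n+1)/2 elements, matching the degrees of freedom of an n×n SPD matrix.

```python
import numpy as np

def sym_orthonormal_basis(n: int) -> list:
    """Orthonormal basis of Sym(n) under the Frobenius inner product tr(U V)."""
    basis = []
    for i in range(n):
        for j in range(i, n):
            E = np.zeros((n, n))
            if i == j:
                E[i, i] = 1.0                           # diagonal unit
            else:
                E[i, j] = E[j, i] = 1.0 / np.sqrt(2.0)  # symmetrized off-diagonal pair
            basis.append(E)
    return basis

def coordinates(S: np.ndarray, basis: list) -> np.ndarray:
    """Coordinates of a symmetric matrix S in the basis: c_k = tr(S E_k)."""
    return np.array([np.trace(S @ E) for E in basis])

def from_coordinates(c: np.ndarray, basis: list) -> np.ndarray:
    """Inverse map: S = sum_k c_k E_k."""
    return sum(ck * E for ck, E in zip(c, basis))

basis = sym_orthonormal_basis(3)
print(len(basis))  # 6
```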

Finally, we give the characterization of an orthonormal basis for LC gyrovector spaces and the expression for the output of FC layers with Log-Cholesky metrics.

Proof. See Appendix L.

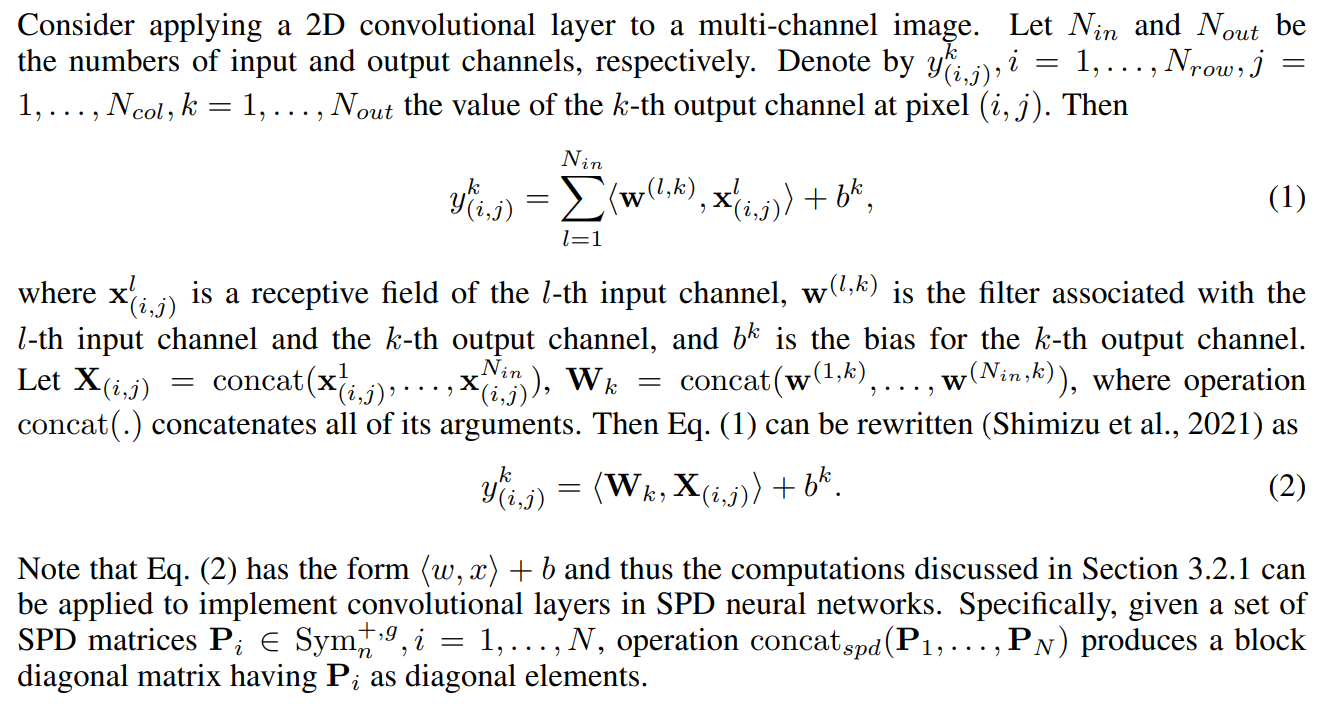
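For the Log-Cholesky (LC) case, a helpful fact is the following: writing P = LLᵀ with L lower triangular with positive diagonal, the map P ↦ ⌊L⌋ + log D(L) (the strictly lower part of L plus the logarithm of its diagonal) is an isometry from the SPD manifold with the Log-Cholesky metric onto lower-triangular matrices with the Frobenius norm. The sketch below computes the resulting distance with NumPy. It does not reproduce the orthonormal basis or the FC output expression given above, and the function names are hypothetical.

```python
import numpy as np

def log_cholesky_chart(P: np.ndarray) -> np.ndarray:
    """Map SPD P = L L^T to floor(L) + diag(log L_ii)."""
    L = np.linalg.cholesky(P)  # lower triangular, positive diagonal
    return np.tril(L, k=-1) + np.diag(np.log(np.diag(L)))

def log_cholesky_distance(P: np.ndarray, Q: np.ndarray) -> float:
    """Log-Cholesky distance: Frobenius distance between the two charts."""
    return float(np.linalg.norm(log_cholesky_chart(P) - log_cholesky_chart(Q), "fro"))

# Q = L L^T with L = [[1, 0], [3, 1]]: only the strictly lower part differs from I.
Q = np.array([[1.0, 3.0], [3.0, 10.0]])
print(round(log_cholesky_distance(np.eye(2), Q), 6))  # 3.0
```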

3.2.2 CONVOLUTIONAL LAYERS IN SPD NEURAL NETWORKS

In Chakraborty et al. (2020), the authors design a convolution operation for SPD neural networks. However, their method is based on the concept of weighted Fréchet mean, while ours is built upon the concepts of SPD hypergyroplane and SPD pseudo-gyrodistance from an SPD matrix to an SPD hypergyroplane (Nguyen & Yang, 2023). Also, our convolution operation can be used for dimensionality reduction, while theirs always produces an output of the same dimension as the inputs.
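The dimensional contrast drawn above can be sketched under the Log-Euclidean (LE) metric, where the weighted Fréchet mean has the closed form exp(Σ_i w_i log P_i) for weights summing to one. This is neither the implementation of Chakraborty et al. (2020) nor the convolution layer proposed here: `le_distance_features` is a hypothetical distance-based layer in which output channel k sums signed LE distances from the inputs to K sets of log-domain hyperplanes. It only illustrates that a mean of n×n inputs stays n×n, whereas a distance-based output has one value per channel, so its size is set by K.

```python
import numpy as np

def spd_log(P):
    w, V = np.linalg.eigh(P)
    return (V * np.log(w)) @ V.T

def spd_exp(S):
    w, V = np.linalg.eigh(S)
    return (V * np.exp(w)) @ V.T

def le_weighted_frechet_mean(mats, weights):
    """Closed-form weighted Frechet mean under the LE metric (same size as inputs)."""
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()
    return spd_exp(sum(wi * spd_log(P) for wi, P in zip(w, mats)))

def le_distance_features(mats, anchors, normals):
    """Hypothetical layer: channel k sums signed LE distances from each input P_i
    to the log-domain hyperplane through anchors[k][i] with normal normals[k][i]."""
    out = np.zeros(len(anchors))
    for k in range(len(anchors)):
        for P, A, W in zip(mats, anchors[k], normals[k]):
            out[k] += np.sum((spd_log(P) - spd_log(A)) * W) / np.linalg.norm(W, "fro")
    return out

rng = np.random.default_rng(0)
n, num_inputs, K = 4, 3, 2

def random_spd(n):
    A = rng.normal(size=(n, n))
    return A @ A.T + n * np.eye(n)

def random_sym(n):
    B = rng.normal(size=(n, n))
    return (B + B.T) / 2

mats = [random_spd(n) for _ in range(num_inputs)]
anchors = [[random_spd(n) for _ in range(num_inputs)] for _ in range(K)]
normals = [[random_sym(n) for _ in range(num_inputs)] for _ in range(K)]

print(le_weighted_frechet_mean(mats, [0.2, 0.3, 0.5]).shape)  # (4, 4)
print(le_distance_features(mats, anchors, normals).shape)      # (2,)
```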

Authors:

(1) Xuan Son Nguyen, ETIS, UMR 8051, CY Cergy Paris University, ENSEA, CNRS, France;

(2) Shuo Yang, ETIS, UMR 8051, CY Cergy Paris University, ENSEA, CNRS, France;

(3) Aymeric Histace, ETIS, UMR 8051, CY Cergy Paris University, ENSEA, CNRS, France.
